OneLayerFFNN Class Reference

Simple one-layer neural network with a configurable activation function.

#include <onelayerffnn.h>

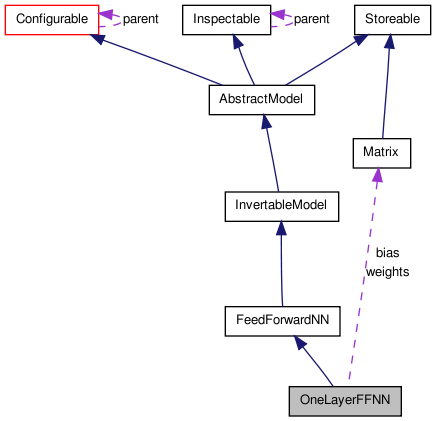

Inherits FeedForwardNN.

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `OneLayerFFNN (double eps, double factor_bias=0.1, const std::string &name="OneLayerFFN", const std::string &revision="$Id: onelayerffnn.h,v 1.10 2011/05/30 13:52:54 martius Exp $")` | Uses a linear activation function. |
| | `OneLayerFFNN (double eps, double factor_bias, ActivationFunction actfun, ActivationFunction dactfun, const std::string &name="OneLayerFFN", const std::string &revision="$Id: onelayerffnn.h,v 1.10 2011/05/30 13:52:54 martius Exp $")` | Uses the activation function `actfun` and its derivative `dactfun`. |
| `virtual` | `~OneLayerFFNN ()` | |
| `virtual void` | `init (unsigned int inputDim, unsigned int outputDim, double unit_map=0.0, RandGen *randGen=0)` | Initialises the network with the given number of input and output units. |
| `virtual const matrix::Matrix` | `process (const matrix::Matrix &input)` | Passive processing of the input. |
| `virtual const matrix::Matrix` | `learn (const matrix::Matrix &input, const matrix::Matrix &nom_output, double learnRateFactor=1)` | Performs learning and returns the network output from before learning. |
| `virtual unsigned int` | `getInputDim () const` | Returns the number of input neurons. |
| `virtual unsigned int` | `getOutputDim () const` | Returns the number of output neurons. |
| `virtual const matrix::Matrix &` | `getWeights () const` | Returns the weight matrix. |
| `virtual const matrix::Matrix &` | `getBias () const` | Returns the bias matrix. |
| `virtual void` | `damp (double damping)` | Damps the weights and biases by multiplying them by (1 - damping). |
| `bool` | `store (FILE *f) const` | Stores the layer in binary form to a file stream. |
| `bool` | `restore (FILE *f)` | Restores the layer from binary data in a file stream. |

Detailed Description

Simple one-layer neural network with a configurable activation function.

Constructor & Destructor Documentation

`OneLayerFFNN (double eps, double factor_bias = 0.1, const std::string &name = "OneLayerFFN", const std::string &revision = "$Id: onelayerffnn.h,v 1.10 2011/05/30 13:52:54 martius Exp $")` [inline]

Uses a linear activation function.

Parameters:

- `eps`: learning rate
- `factor_bias`: learning rate factor for bias learning
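As a standalone illustration of what the linear constructor implies, the C++ sketch below defines an identity activation with its constant derivative and shows one plausible reading of `factor_bias`, in which the bias learning rate is `eps * factor_bias`. The typedef `ActivationFunction` as `double (*)(double)`, the helpers `linear`, `dlinear`, `StepSizes` and `stepSizes`, and the bias-rate formula are assumptions for this sketch, not the library's declarations.

```cpp
#include <cassert>

// Assumed callback type: maps a net input z to an activation value.
typedef double (*ActivationFunction)(double);

// Identity activation, as used by the linear constructor (sketch).
double linear(double z) { return z; }

// Its first derivative is the constant 1.
double dlinear(double /*z*/) { return 1.0; }

// Hypothetical pair of effective step sizes.
struct StepSizes {
  double weights;
  double bias;
};

// Assumption: the bias learning rate is eps scaled by factor_bias.
StepSizes stepSizes(double eps, double factor_bias = 0.1) {
  assert(eps >= 0.0 && factor_bias >= 0.0);
  return StepSizes{eps, eps * factor_bias};
}
```

Under this reading, `stepSizes(0.01)` gives a weight step of 0.01 and a bias step of about 0.001.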

`OneLayerFFNN (double eps, double factor_bias, ActivationFunction actfun, ActivationFunction dactfun, const std::string &name = "OneLayerFFN", const std::string &revision = "$Id: onelayerffnn.h,v 1.10 2011/05/30 13:52:54 martius Exp $")` [inline]

Parameters:

- `eps`: learning rate
- `factor_bias`: learning rate factor for bias learning
- `actfun`: callback for the activation function (see FeedForwardNN)
- `dactfun`: callback for the first derivative of the activation function
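Because this constructor takes the activation function and its first derivative as two separate callbacks, the pair must agree. A minimal C++ sketch, assuming a `double (*)(double)` callback type, pairs `tanh` with its derivative `1 - tanh(z)^2` and checks the pair with a central difference; `tanhAct`, `dtanhAct` and `derivativeMatches` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Assumed callback type for actfun and dactfun.
typedef double (*ActivationFunction)(double);

// tanh activation (named tanhAct to avoid clashing with ::tanh).
double tanhAct(double z) { return std::tanh(z); }

// First derivative of tanh: 1 - tanh(z)^2.
double dtanhAct(double z) {
  const double t = std::tanh(z);
  return 1.0 - t * t;
}

// Central-difference check that dactfun is the derivative of actfun at z.
bool derivativeMatches(ActivationFunction actfun, ActivationFunction dactfun,
                       double z, double h = 1e-5, double tol = 1e-6) {
  assert(h > 0.0);
  const double numeric = (actfun(z + h) - actfun(z - h)) / (2.0 * h);
  return std::fabs(numeric - dactfun(z)) < tol;
}
```

A check like this is cheap to run once before handing a custom callback pair to the network, since a mismatched derivative would silently distort learning.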

`virtual ~OneLayerFFNN ()` [inline, virtual]

Member Function Documentation

`virtual void damp (double damping)` [inline, virtual]

Damps the weights and biases by multiplying them by (1 - damping).

Implements FeedForwardNN.
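`damp` scales every parameter by the same factor. The sketch below keeps weights and biases in plain `std::vector<double>` containers instead of `matrix::Matrix` (an assumption made to stay self-contained), and it additionally rejects `damping` outside [0, 1], a precondition the documentation above does not state.

```cpp
#include <cassert>
#include <vector>

// Sketch: multiply every weight and bias by (1 - damping).
// The [0, 1] range check is an assumption of this sketch only.
void damp(std::vector<double>& weights, std::vector<double>& bias,
          double damping) {
  assert(damping >= 0.0 && damping <= 1.0);
  const double keep = 1.0 - damping;
  for (double& w : weights) w *= keep;
  for (double& b : bias) b *= keep;
}
```

For example, damping of 0.5 halves every parameter: weights (2, -4) become (1, -2) and a bias of 1 becomes 0.5.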

`virtual const matrix::Matrix& getBias () const` [inline, virtual]

Returns the bias matrix.

`virtual unsigned int getInputDim () const` [inline, virtual]

Returns the number of input neurons.

Implements AbstractModel.

`virtual unsigned int getOutputDim () const` [inline, virtual]

Returns the number of output neurons.

Implements AbstractModel.

`virtual const matrix::Matrix& getWeights () const` [inline, virtual]

Returns the weight matrix.

`void init (unsigned int inputDim, unsigned int outputDim, double unit_map = 0.0, RandGen *randGen = 0)` [virtual]

Initialises the network with the given number of input and output units.

Implements AbstractModel.
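A possible shape for `init`, written against hypothetical standalone types: the weights form an `outputDim` by `inputDim` matrix (stored row-major) and the bias has one entry per output unit, which is also how `getInputDim` and `getOutputDim` are derived in this sketch. The handling of `unit_map` (zero means small random weights, non-zero means `unit_map` on the diagonal), the ±0.01 range, and the `seed` parameter standing in for `RandGen *randGen` are all assumptions, not documented behaviour.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical parameter container: row-major outputDim x inputDim weights
// and one bias entry per output unit.
struct Layer {
  unsigned int rows = 0, cols = 0;
  std::vector<double> weights;  // weights[i * cols + j]: input j -> output i
  std::vector<double> bias;

  unsigned int getInputDim() const { return cols; }
  unsigned int getOutputDim() const { return rows; }
};

// Sketch of init(). Assumed semantics: unit_map == 0 gives small random
// weights; otherwise unit_map is placed on the diagonal. Bias starts at zero.
void init(Layer& l, unsigned int inputDim, unsigned int outputDim,
          double unit_map = 0.0, unsigned int seed = 42) {
  assert(inputDim > 0 && outputDim > 0);
  l.rows = outputDim;
  l.cols = inputDim;
  l.weights.assign(outputDim * inputDim, 0.0);
  l.bias.assign(outputDim, 0.0);
  if (unit_map != 0.0) {
    for (unsigned int i = 0; i < outputDim && i < inputDim; ++i)
      l.weights[i * inputDim + i] = unit_map;
  } else {
    std::mt19937 gen(seed);  // stands in for the RandGen* argument
    std::uniform_real_distribution<double> dist(-0.01, 0.01);
    for (double& w : l.weights) w = dist(gen);
  }
}
```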

`const Matrix learn (const matrix::Matrix &input, const matrix::Matrix &nom_output, double learnRateFactor = 1)` [virtual]

Performs learning and returns the network output from before learning.

Implements AbstractModel.
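The contract of `learn` (update the parameters, but return the output computed before the update) can be illustrated with the classic delta rule for a single layer. The C++ sketch below assumes that rule, an effective learning rate of `eps * learnRateFactor`, and a bias step additionally scaled by `factor_bias`; the struct `Net` and its fields are hypothetical and use `std::vector<double>` in place of `matrix::Matrix`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed callback type for the activation and its derivative.
typedef double (*ActivationFunction)(double);

// Hypothetical single-layer net: y = f(W x + b), W row-major rows x cols.
struct Net {
  unsigned int rows, cols;
  std::vector<double> W, b;
  ActivationFunction f, df;
  double eps, factor_bias;
};

// Sketch of learn(): one delta-rule step towards target.
// Returns the output computed BEFORE the parameters are updated.
std::vector<double> learn(Net& n, const std::vector<double>& x,
                          const std::vector<double>& target,
                          double learnRateFactor = 1.0) {
  assert(x.size() == n.cols && target.size() == n.rows);
  const double e = n.eps * learnRateFactor;
  std::vector<double> z(n.rows), y(n.rows);
  for (unsigned int i = 0; i < n.rows; ++i) {  // forward pass
    z[i] = n.b[i];
    for (unsigned int j = 0; j < n.cols; ++j) z[i] += n.W[i * n.cols + j] * x[j];
    y[i] = n.f(z[i]);
  }
  for (unsigned int i = 0; i < n.rows; ++i) {  // update from the error
    const double delta = (target[i] - y[i]) * n.df(z[i]);
    for (unsigned int j = 0; j < n.cols; ++j) n.W[i * n.cols + j] += e * delta * x[j];
    n.b[i] += e * n.factor_bias * delta;
  }
  return y;
}
```

For a linear unit with zero initial parameters, `eps = 0.5`, `factor_bias = 0.1`, input 1 and target 2, the returned output is 0, after which the weight is 1.0 and the bias is 0.1.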

`const Matrix process (const matrix::Matrix &input)` [virtual]

Passive processing of the input: computes the network output without learning.

Implements AbstractModel.
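Passive processing computes the layer output without modifying any parameters. Assuming the usual single-layer form y = f(Wx + b), a minimal standalone C++ sketch looks like this; the free function `process` and its `std::vector<double>` arguments are illustrative stand-ins for the member function and `matrix::Matrix`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed callback type for the activation function.
typedef double (*ActivationFunction)(double);

// Sketch: y_i = f(sum_j W[i][j] * x_j + b_i). W is row-major with
// b.size() rows and x.size() columns; W and b are only read.
std::vector<double> process(const std::vector<double>& W,
                            const std::vector<double>& b,
                            ActivationFunction f,
                            const std::vector<double>& x) {
  const std::size_t rows = b.size();
  const std::size_t cols = x.size();
  assert(W.size() == rows * cols);
  std::vector<double> y(rows);
  for (std::size_t i = 0; i < rows; ++i) {
    double z = b[i];
    for (std::size_t j = 0; j < cols; ++j) z += W[i * cols + j] * x[j];
    y[i] = f(z);
  }
  return y;
}
```

With W = [[1, 2], [3, 4]], b = (0.5, -0.5), an identity activation and x = (1, 1), the result is (3.5, 6.5).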

`bool restore (FILE *f)` [virtual]

Restores the layer from binary data in a file stream.

Implements Storeable.

`bool store (FILE *f) const` [virtual]

Stores the layer in binary form to a file stream.

Implements Storeable.
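`store` and `restore` exchange the layer through a C `FILE *` stream. The on-disk layout used by the class is not documented here, so the C++ sketch below invents a simple one (the two dimensions followed by the raw weight and bias values) purely to show a symmetric binary round trip with `fwrite` and `fread`; `Params`, `store` and `restore` are hypothetical free-standing versions. A file written this way is not portable between platforms that differ in `sizeof(unsigned int)`, `sizeof(double)` or byte order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <vector>

// Hypothetical layer parameters; not the class's real storage format.
struct Params {
  unsigned int rows = 0, cols = 0;
  std::vector<double> weights, bias;
};

// Writes rows, cols, weights, bias as raw binary. False on bad sizes or short write.
bool store(const Params& p, FILE* f) {
  const std::size_t n = static_cast<std::size_t>(p.rows) * p.cols;
  if (p.weights.size() != n || p.bias.size() != p.rows) return false;
  if (std::fwrite(&p.rows, sizeof p.rows, 1, f) != 1) return false;
  if (std::fwrite(&p.cols, sizeof p.cols, 1, f) != 1) return false;
  if (n && std::fwrite(p.weights.data(), sizeof(double), n, f) != n) return false;
  if (p.rows && std::fwrite(p.bias.data(), sizeof(double), p.rows, f) != p.rows) return false;
  return true;
}

// Reads the same layout back; p is only modified if every read succeeds.
bool restore(Params& p, FILE* f) {
  unsigned int rows = 0, cols = 0;
  if (std::fread(&rows, sizeof rows, 1, f) != 1) return false;
  if (std::fread(&cols, sizeof cols, 1, f) != 1) return false;
  std::vector<double> w(static_cast<std::size_t>(rows) * cols), b(rows);
  if (!w.empty() && std::fread(w.data(), sizeof(double), w.size(), f) != w.size()) return false;
  if (!b.empty() && std::fread(b.data(), sizeof(double), b.size(), f) != b.size()) return false;
  p.rows = rows;
  p.cols = cols;
  p.weights = w;
  p.bias = b;
  return true;
}
```

A round trip writes with `store`, rewinds the stream with `std::rewind`, and reads back with `restore`; a temporary stream from `std::tmpfile()` is enough for a quick check.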

The documentation for this class was generated from the following files:

1.6.3