#include <qlearning.h>

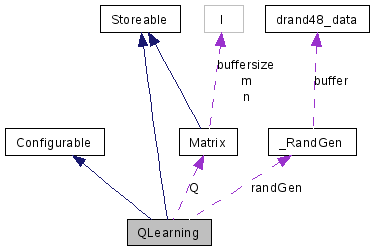

Inherits Configurable, and Storeable.

Inheritance diagram for QLearning:

Public Member Functions | |

| QLearning (double eps, double discount, double exploration, int eligibility, bool random_initQ=false, bool useSARSA=false, int tau=1000) | |

| virtual | ~QLearning () |

| virtual void | init (unsigned int stateDim, unsigned int actionDim, RandGen *randGen=0) |

| initialisation with the given number of action and states | |

| virtual unsigned int | select (unsigned int state) |

| selection of action given current state. | |

| virtual unsigned int | select_sample (unsigned int state) |

| selection of action given current state. | |

| virtual unsigned int | select_keepold (unsigned int state) |

| select with preference to old (90% if good) and 30% second best | |

| virtual double | learn (unsigned int state, unsigned int action, double reward, double learnRateFactor=1) |

| matrix::Matrix | getActionValues (unsigned int state) |

| returns the vector of values for all actions given the current state | |

| virtual void | reset () |

| tells the q learning that the agent was reset, so that it forgets it memory. | |

| virtual unsigned int | getStateDim () const |

| returns the number of states | |

| virtual unsigned int | getActionDim () const |

| returns the number of actions | |

| virtual double | getCollectedReward () const |

| returns the collectedReward reward | |

| virtual const matrix::Matrix & | getQ () const |

| returns q table (mxn) == (states x actions) | |

| virtual bool | store (FILE *f) const |

| stores the object to the given file stream (binary). | |

| virtual bool | restore (FILE *f) |

| loads the object from the given file stream (binary). | |

Static Public Member Functions | |

| static int | valInCrossProd (const std::list< std::pair< int, int > > &vals) |

| expects a list of value,range and returns the associated state | |

| static std::list< int > | ConfInCrossProd (const std::list< int > &ranges, int val) |

| expects a list of ranges and a state/action and return the configuration | |

Public Attributes | |

| bool | useSARSA |

| if true, use SARSA strategy otherwise qlearning | |

Protected Attributes | |

| double | eps |

| double | discount |

| double | exploration |

| double | eligibility |

| bool | random_initQ |

| int | tau |

| time horizont for averaging the reward | |

| matrix::Matrix | Q |

| int * | actions |

| < Q table (mxn) == (states x actions) | |

| int * | states |

| double * | rewards |

| int | ringbuffersize |

| double * | longrewards |

| int | t |

| bool | initialised |

| double | collectedReward |

| RandGen * | randGen |

| QLearning | ( | double | eps, | |

| double | discount, | |||

| double | exploration, | |||

| int | eligibility, | |||

| bool | random_initQ = false, |

|||

| bool | useSARSA = false, |

|||

| int | tau = 1000 | |||

| ) |

| eps | learning rate (typically 0.1) | |

| discount | discount factor for Q-values (typically 0.9) | |

| exploration | exploration rate (typically 0.02) | |

| eligibility | number of steps to update backwards in time | |

| random_initQ | if true Q table is filled with small random numbers at the start (default: false) | |

| useSARSA | if true, use SARSA strategy otherwise qlearning (default: false) | |

| tau | number of time steps to average over reward for col_rew |

| ~QLearning | ( | ) | [virtual] |

| std::list< int > ConfInCrossProd | ( | const std::list< int > & | ranges, | |

| int | val | |||

| ) | [static] |

expects a list of ranges and a state/action and return the configuration

| unsigned int getActionDim | ( | ) | const [virtual] |

returns the number of actions

| matrix::Matrix getActionValues | ( | unsigned int | state | ) |

returns the vector of values for all actions given the current state

| double getCollectedReward | ( | ) | const [virtual] |

returns the collectedReward reward

| virtual const matrix::Matrix& getQ | ( | ) | const [inline, virtual] |

returns q table (mxn) == (states x actions)

| unsigned int getStateDim | ( | ) | const [virtual] |

returns the number of states

| void init | ( | unsigned int | stateDim, | |

| unsigned int | actionDim, | |||

| RandGen * | randGen = 0 | |||

| ) | [virtual] |

initialisation with the given number of action and states

| actionDim | number of actions | |

| stateDim | number of states | |

| unit_map | if 0 the parametes are choosen randomly. Otherwise the model is initialised to represent a unit_map with the given response strength. |

| double learn | ( | unsigned int | state, | |

| unsigned int | action, | |||

| double | reward, | |||

| double | learnRateFactor = 1 | |||

| ) | [virtual] |

| void reset | ( | ) | [virtual] |

tells the q learning that the agent was reset, so that it forgets it memory.

please note, that updating the Q-table is one step later, so in case of a reward you should call learn one more time before reset.

| bool restore | ( | FILE * | f | ) | [virtual] |

| unsigned int select | ( | unsigned int | state | ) | [virtual] |

selection of action given current state.

The policy is to take the actions with the highest value, or a random action at the rate of exploration

| unsigned int select_keepold | ( | unsigned int | state | ) | [virtual] |

select with preference to old (90% if good) and 30% second best

| unsigned int select_sample | ( | unsigned int | state | ) | [virtual] |

selection of action given current state.

The policy is to sample from the above average actions, with bias to the old action (also exploration included).

| bool store | ( | FILE * | f | ) | const [virtual] |

| int valInCrossProd | ( | const std::list< std::pair< int, int > > & | vals | ) | [static] |

expects a list of value,range and returns the associated state

int* actions [protected] |

< Q table (mxn) == (states x actions)

double collectedReward [protected] |

double discount [protected] |

double eligibility [protected] |

double eps [protected] |

double exploration [protected] |

bool initialised [protected] |

double* longrewards [protected] |

matrix::Matrix Q [protected] |

bool random_initQ [protected] |

double* rewards [protected] |

int ringbuffersize [protected] |

int* states [protected] |

int t [protected] |

int tau [protected] |

time horizont for averaging the reward

| bool useSARSA |

if true, use SARSA strategy otherwise qlearning

1.4.7

1.4.7