#include <elman.h>

Inherits MultiLayerFFNN.

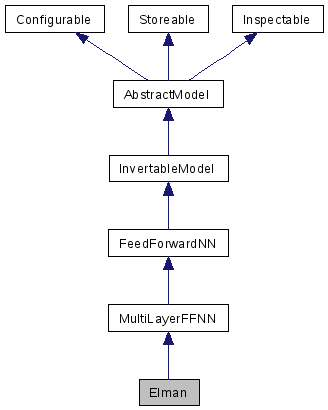

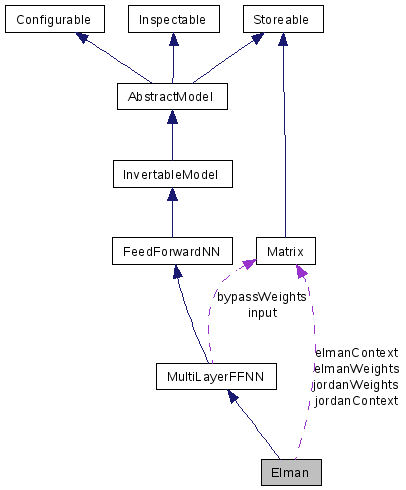

Inheritance diagram for Elman:

Public Member Functions | |

| Elman (double eps, const std::vector< Layer > &layers, bool useElman, bool useJordan=false, bool useBypass=false) | |

| virtual | ~Elman () |

| virtual void | init (unsigned int inputDim, unsigned int outputDim, double unit_map=0.0, RandGen *randGen=0) |

| initialisation of the network with the given number of input and output units | |

| virtual const matrix::Matrix | process (const matrix::Matrix &input) |

| passive processing of the input (this will be different for every input, since it is a recurrent network) | |

| virtual const matrix::Matrix | learn (const matrix::Matrix &input, const matrix::Matrix &nom_output, double learnRateFactor=1) |

| performs learning and returns the network output before learning | |

| virtual NetUpdate | weightIncrement (const matrix::Matrix &xsi) |

| determines the weight and bias updates | |

| virtual NetUpdate | weightIncrementBlocked (const matrix::Matrix &xsi_, int blockedlayer, int blockfrom, int blockto) |

| like weightIncrement but with blocked backprop flow for some neurons. | |

| virtual void | updateWeights (const NetUpdate &updates) |

| applies the weight increments to the weight (and bias) matrices with the learningrate and the learnRateFactor | |

| void | damp (double damping) |

| damps the weights and the biases by multiplying (1-damping) | |

| bool | store (FILE *f) const |

| stores the layer binary into file stream | |

| bool | restore (FILE *f) |

| restores the layer binary from file stream | |

| virtual paramkey | getName () const |

| return the name of the object | |

| virtual iparamkeylist | getInternalParamNames () const |

| The list of the names of all internal parameters given by getInternalParams(). | |

| virtual iparamvallist | getInternalParams () const |

| virtual ilayerlist | getStructuralLayers () const |

| Specifies which parameter vector forms a structural layer (in terms of a neural network) The ordering is important. | |

| virtual iconnectionlist | getStructuralConnections () const |

| Specifies which parameter matrix forms a connection between layers (in terms of a neural network) The orderning is not important. | |

Protected Attributes | |

| matrix::Matrix | elmanWeights |

| matrix::Matrix | elmanContext |

| matrix::Matrix | jordanWeights |

| matrix::Matrix | jordanContext |

| bool | useElman |

| bool | useJordan |

| Elman | ( | double | eps, | |

| const std::vector< Layer > & | layers, | |||

| bool | useElman, | |||

| bool | useJordan = false, |

|||

| bool | useBypass = false | |||

| ) | [inline] |

| eps | learning rate | |

| layers | Layer description (the input layer is not specified (always linear)) | |

| lambda | self-recurrent feedback strength of context neurons |

| virtual ~Elman | ( | ) | [inline, virtual] |

| void damp | ( | double | damping | ) | [virtual] |

| Inspectable::iparamkeylist getInternalParamNames | ( | ) | const [virtual] |

The list of the names of all internal parameters given by getInternalParams().

The naming convention is "v[i]" for vectors and "A[i][j]" for matrices, where i, j start at 0.

Reimplemented from MultiLayerFFNN.

| Inspectable::iparamvallist getInternalParams | ( | ) | const [virtual] |

| virtual paramkey getName | ( | ) | const [inline, virtual] |

| Inspectable::iconnectionlist getStructuralConnections | ( | ) | const [virtual] |

Specifies which parameter matrix forms a connection between layers (in terms of a neural network) The orderning is not important.

Reimplemented from MultiLayerFFNN.

| Inspectable::ilayerlist getStructuralLayers | ( | ) | const [virtual] |

Specifies which parameter vector forms a structural layer (in terms of a neural network) The ordering is important.

The first entry is the input layer and so on.

Reimplemented from MultiLayerFFNN.

| void init | ( | unsigned int | inputDim, | |

| unsigned int | outputDim, | |||

| double | unit_map = 0.0, |

|||

| RandGen * | randGen = 0 | |||

| ) | [virtual] |

initialisation of the network with the given number of input and output units

Reimplemented from MultiLayerFFNN.

| const Matrix learn | ( | const matrix::Matrix & | input, | |

| const matrix::Matrix & | nom_output, | |||

| double | learnRateFactor = 1 | |||

| ) | [virtual] |

| const Matrix process | ( | const matrix::Matrix & | input | ) | [virtual] |

passive processing of the input (this will be different for every input, since it is a recurrent network)

Reimplemented from MultiLayerFFNN.

| bool restore | ( | FILE * | f | ) | [virtual] |

| bool store | ( | FILE * | f | ) | const [virtual] |

| void updateWeights | ( | const NetUpdate & | updates | ) | [virtual] |

applies the weight increments to the weight (and bias) matrices with the learningrate and the learnRateFactor

| NetUpdate weightIncrement | ( | const matrix::Matrix & | xsi | ) | [virtual] |

determines the weight and bias updates

| NetUpdate weightIncrementBlocked | ( | const matrix::Matrix & | xsi_, | |

| int | blockedlayer, | |||

| int | blockfrom, | |||

| int | blockto | |||

| ) | [virtual] |

like weightIncrement but with blocked backprop flow for some neurons.

| blockedlayer | index of layer with blocked neurons | |

| blockfrom | index of neuron in blockedlayer to start blocking | |

| blockto | index of neuron in blockedlayer to end blocking (if -1 then to end) (not included) |

matrix::Matrix elmanContext [protected] |

matrix::Matrix elmanWeights [protected] |

matrix::Matrix jordanContext [protected] |

matrix::Matrix jordanWeights [protected] |

bool useElman [protected] |

bool useJordan [protected] |

1.4.7

1.4.7